Sound

Overview

Computer Science Tutor

https://www.youtube.com/watch?v=HlOTuCFtuV8&list=PL04uZ7242_M6O_6ITD6ncf7EonVHyBeCm&index=9

Converting Analogue to Digital Sound

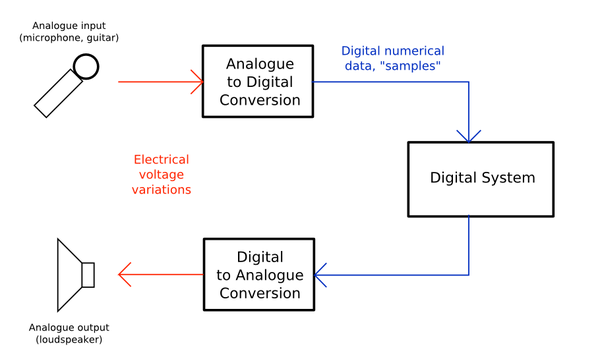

The following image shows how sound is converted into a digital file when it is captured by an input device such as a microphone:

Sampling Process

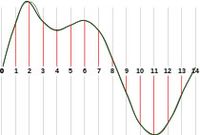

Pulse Amplitude Modulation (PAM) is the process of sampling an analogue signal at regular intervals and producing a series of electrical pulses whose heights are proportional to the signal's amplitude at each sampling point.

Pulse Code Modulation (PCM) is the process of converting these sampled pulses into digital data: the height of each sample is rounded to one of a fixed set of levels, and that level is recorded as a binary number.
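The two steps can be sketched in Python. This is a minimal illustration, not how a real ADC works internally: the function names `pam_sample` and `pcm_encode`, the 2 Hz test tone, and the assumption that the signal's amplitude lies between -1.0 and 1.0 are all hypothetical choices made for the example.

```python
import math

def pam_sample(signal, sample_rate, duration):
    """PAM step: measure the analogue signal at regular intervals.
    Each value returned is the height of one pulse."""
    count = int(sample_rate * duration)
    return [signal(i / sample_rate) for i in range(count)]

def pcm_encode(pulses, bits):
    """PCM step: round each pulse height (assumed to lie between -1.0 and 1.0)
    to the nearest of 2**bits evenly spaced levels and record it in binary."""
    top_level = 2 ** bits - 1
    codes = []
    for height in pulses:
        level = round((height + 1) / 2 * top_level)  # map -1..1 onto 0..top_level
        level = max(0, min(top_level, level))       # clamp any out-of-range value
        codes.append(format(level, f"0{bits}b"))    # e.g. 5 with 3 bits -> "101"
    return codes

def tone(t):
    """Hypothetical 2 Hz 'analogue' sound wave."""
    return math.sin(2 * math.pi * 2 * t)

pulses = pam_sample(tone, sample_rate=8, duration=1)  # 8 pulses in one second
print(pcm_encode(pulses, bits=3))
```

Each 3-bit code is one PCM sample; using more bits per sample would give more levels and a closer match to the original pulse heights.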

Further Explanation

https://www.youtube.com/watch?v=VyqGzUbTphs&list=PLCiOXwirraUA69WUAMYyFicC5qbQ4PGc4&index=1

Representing Sound

To store sound, the analogue sound wave must be converted into digital data. This conversion is carried out by an analogue-to-digital converter (ADC), sometimes called a digitiser. A 16-bit ADC is enough for CD-quality sound, while high-resolution (Hi-Res) audio files use 24 bits per sample.

Sampling Rate

The sampling rate (or sampling frequency) is the number of samples taken per second, measured in hertz (Hz). The higher the sampling rate, the more accurately the sound is represented. However, a higher sampling rate also produces a bigger file, because more data has to be stored.
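The effect of the sampling rate can be sketched by sampling a hypothetical 5 Hz tone at three rates and measuring how far a simple reconstruction strays from the original. Joining the samples with straight lines (linear interpolation) is an assumption standing in for real playback hardware, and the helper names are illustrative only.

```python
import math

def sample(signal, rate, duration):
    """Measure `signal` `rate` times per second; the end point is included
    so the reconstruction below covers the whole duration."""
    n = int(rate * duration) + 1
    times = [i / rate for i in range(n)]
    return times, [signal(t) for t in times]

def reconstruct(times, values, t):
    """Estimate the signal at time t by joining neighbouring samples with a
    straight line (linear interpolation), a simple stand-in for playback."""
    for i in range(len(times) - 1):
        t0, t1 = times[i], times[i + 1]
        if t0 <= t <= t1:
            fraction = (t - t0) / (t1 - t0)
            return values[i] + fraction * (values[i + 1] - values[i])
    raise ValueError("t is outside the sampled range")

def max_error(signal, rate, duration=1.0, checks=1000):
    """Largest difference between the original and the reconstructed signal."""
    times, values = sample(signal, rate, duration)
    worst = 0.0
    for k in range(checks + 1):
        t = duration * k / checks
        worst = max(worst, abs(signal(t) - reconstruct(times, values, t)))
    return worst

def tone(t):
    """Hypothetical 5 Hz test tone standing in for the analogue sound."""
    return math.sin(2 * math.pi * 5 * t)

for rate in (20, 50, 200):
    print(f"{rate:>3} Hz: max error {max_error(tone, rate):.4f}")
```

The maximum error shrinks as the rate goes up, while the number of stored samples grows in proportion: 200 Hz stores ten times as many values as 20 Hz for the same second of sound.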

Sampling Resolution

The sampling resolution (also called bit depth) is the number of bits used to store each sample. More bits allow a wider range of amplitude values to be recorded, so the sound can be represented more precisely. With n bits there are 2^n possible values: if only one bit is used, each sample can only be 1 or 0, giving just two levels, whereas 8 bits give 256 different levels (0 to 255). In a way, a basic PC beeper, which can only switch on and off to produce a single tone when there is an error, is like 1-bit sound, while music is usually stored with far more bits per sample, such as the 16 bits used for CD-quality audio.
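The relationship between bits and levels is simply 2^n. The sketch below also estimates the worst-case rounding error for each resolution, assuming the signal spans -1.0 to 1.0 and the levels are evenly spaced; the function names are hypothetical.

```python
def quantisation_levels(bits):
    """Number of distinct amplitude values a sample with `bits` bits can store."""
    return 2 ** bits

def max_rounding_error(bits, full_scale=2.0):
    """Worst-case rounding error when a signal spanning `full_scale`
    (for example -1.0 to 1.0) is rounded to the nearest of 2**bits evenly
    spaced levels: half the gap between two neighbouring levels."""
    step = full_scale / (quantisation_levels(bits) - 1)
    return step / 2

for bits in (1, 8, 16, 24):
    print(f"{bits:>2}-bit: {quantisation_levels(bits):,} levels, "
          f"max rounding error {max_rounding_error(bits):.8f}")
```

This prints 2, 256, 65,536 and 16,777,216 levels for 1, 8, 16 and 24 bits, and each extra bit roughly halves the rounding error.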

Additional Video

https://www.youtube.com/watch?v=v7RwEnirp7I&list=PLCiOXwirraUA69WUAMYyFicC5qbQ4PGc4

Calculating File Size

A sound is sampled at 5 kHz (5000 Hz) and the sample resolution is 16 bits:

- There are 5000 samples per second, and each sample is 2 bytes (16 bits)

- 1 second of sound will be 5000 x 2 = 10,000 bytes

- 10 seconds of sound will be 10,000 x 10 = 100,000 bytes
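The same calculation can be written as a small function: size in bytes = sample rate x resolution in bits x seconds / 8. The `channels` parameter is an addition not in the example above (1 for mono, 2 for stereo), and the CD figures in the last line (44,100 Hz, 16 bits, stereo) are used purely as a further illustration.

```python
def sound_file_size_bytes(sample_rate_hz, resolution_bits, seconds, channels=1):
    """Size of uncompressed sampled sound in bytes.
    channels: 1 = mono (as in the worked example), 2 = stereo."""
    total_bits = sample_rate_hz * resolution_bits * seconds * channels
    return total_bits // 8  # 8 bits per byte

print(sound_file_size_bytes(5000, 16, 1))    # 10000
print(sound_file_size_bytes(5000, 16, 10))   # 100000
print(sound_file_size_bytes(44_100, 16, 60, channels=2))  # 10584000 (one minute of CD audio)
```

This ignores any file header and assumes the sound is not compressed.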

https://www.youtube.com/watch?v=al5HsKIRhQw&list=PLCiOXwirraUA69WUAMYyFicC5qbQ4PGc4

Nyquist's Theorem

In 1928 Harry Nyquist showed that, to sample a sound so that it can be reproduced accurately, the sampling rate must be at least double the highest frequency present in the original sound. Sampling at this rate captures every change in the wave, so the pitch is preserved; if the rate is too low, high frequencies are not captured correctly and are instead heard as lower, false frequencies (an effect called aliasing). For example, human hearing extends to about 20 kHz, which is why CD audio is sampled at 44.1 kHz, just over double that.
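The rule and its failure case can be checked numerically. The sketch below uses the standard formula for the apparent (alias) frequency of a pure tone after sampling; the function names and the 7 Hz / 10 Hz figures are illustrative assumptions, chosen small so the numbers are easy to follow.

```python
import math

def minimum_sample_rate(highest_frequency_hz):
    """Nyquist: sample at least twice as fast as the highest frequency present."""
    return 2 * highest_frequency_hz

def alias_frequency(signal_hz, sample_rate_hz):
    """Frequency a pure tone appears to have once sampled.
    It equals signal_hz whenever sample_rate_hz > 2 * signal_hz."""
    return abs(signal_hz - sample_rate_hz * round(signal_hz / sample_rate_hz))

def cosine_samples(freq_hz, sample_rate_hz, count):
    """Sample a cosine wave of freq_hz, count times at sample_rate_hz."""
    return [math.cos(2 * math.pi * freq_hz * n / sample_rate_hz) for n in range(count)]

print(minimum_sample_rate(20_000))  # 40000
print(alias_frequency(7, 20))       # 7: 20 Hz is fast enough for a 7 Hz tone
print(alias_frequency(7, 10))       # 3: 10 Hz is too slow, so 7 Hz looks like 3 Hz

# Sampled at 10 Hz, a 7 Hz tone gives exactly the same values as a 3 Hz tone
seven = cosine_samples(7, 10, 10)
three = cosine_samples(3, 10, 10)
print(all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(seven, three)))  # True
```

Because the two sets of samples are identical, nothing in the stored data can tell the player that the original was 7 Hz, which is exactly the problem the Nyquist rate avoids.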

MIDI Data

MIDI stands for Musical Instrument Digital Interface.

MIDI takes a completely different approach from other sound recording methods. Instead of recording sound and storing it in a digital format, it writes out a set of instructions that are used to synthesise the sound when it is played back. The playback device produces the sound using its own pre-recorded digital samples or synthesised sounds for each instrument.

The advantages of using MIDI instead of conventional recording methods are that it is a fraction of the size of a digitally recorded version, it is easy to edit or manipulate, and notes can be passed to a different instrument and the attributes of each note can be altered.
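To see why MIDI data is so small, the sketch below builds raw MIDI 1.0 channel messages by hand: Note On (status byte 0x90 plus the channel number), Note Off (0x80 plus the channel) and Program Change (0xC0 plus the channel), which selects the instrument. The helper names are hypothetical, and timing information is left out: a real Standard MIDI File also stores delta-times and headers, so it would be somewhat larger, though still tiny compared with sampled audio. Program 40 is Violin in the General MIDI sound set (numbering from 0).

```python
def _check(value, highest, name):
    """MIDI data bytes are 7-bit (0-127) and channels are 4-bit (0-15)."""
    if not 0 <= value <= highest:
        raise ValueError(f"{name} must be between 0 and {highest}")
    return value

def note_on(channel, note, velocity):
    """3-byte Note On: start playing `note` at loudness `velocity`."""
    return bytes([0x90 | _check(channel, 15, "channel"),
                  _check(note, 127, "note"), _check(velocity, 127, "velocity")])

def note_off(channel, note, velocity=0):
    """3-byte Note Off: stop playing `note`."""
    return bytes([0x80 | _check(channel, 15, "channel"),
                  _check(note, 127, "note"), _check(velocity, 127, "velocity")])

def program_change(channel, program):
    """2-byte Program Change: choose which instrument the channel uses."""
    return bytes([0xC0 | _check(channel, 15, "channel"), _check(program, 127, "program")])

MIDDLE_C = 60  # common convention: note 60 is middle C

# Play one middle C on a violin (General MIDI program 40, counting from 0)
midi_data = program_change(0, 40) + note_on(0, MIDDLE_C, 100) + note_off(0, MIDDLE_C)

# The same note held for one second, stored as 16-bit mono samples at 44,100 Hz
pcm_size = 44_100 * 2

print(len(midi_data), "bytes of MIDI vs", pcm_size, "bytes of sampled sound")
# 8 bytes of MIDI vs 88200 bytes of sampled sound
```

Passing the note to a different instrument only means changing the single program byte; the Note On and Note Off messages stay the same.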

https://www.youtube.com/watch?v=AvqP3BhO0d8&list=PLCiOXwirraUA69WUAMYyFicC5qbQ4PGc4

Audio Compression

Audio compression reduces the storage space that a digital sound file takes up by removing sounds that humans would not be able to hear when listening to it (this is known as perceptual, or lossy, compression). For example, in a song with guitar and drums, a loud drum hit will often drown out the guitar, making it hard to hear or even completely inaudible, so that guitar data can be removed. The smaller file takes up less storage space, which is useful for devices such as phones with limited storage. It is also useful for streaming music: less data needs to be sent, which reduces the chance of the music buffering and allows smoother, more consistent listening.
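Real codecs such as MP3 use detailed psychoacoustic models, but the idea in the drum-and-guitar example can be sketched as a toy form of frequency masking. Here a moment of sound is described as a list of (frequency in Hz, level in dB) components, and a component is dropped if a much louder one nearby would drown it out. The 100 Hz window, the 20 dB margin and the example frame are hypothetical values chosen for illustration.

```python
def apply_toy_masking(components, window_hz=100, margin_db=20):
    """Drop any (frequency_hz, level_db) component that has another component
    within `window_hz` which is at least `margin_db` louder.
    Both thresholds are illustrative, not taken from any real codec."""
    kept = []
    for freq, level in components:
        masked = any(
            other_level - level >= margin_db and abs(other_freq - freq) <= window_hz
            for other_freq, other_level in components
        )
        if not masked:
            kept.append((freq, level))  # audible, so keep it in the file
    return kept

# Hypothetical frame: a loud drum component at 200 Hz, a quiet guitar note
# at 250 Hz right next to it, and a guitar note at 1000 Hz with nothing loud nearby
frame = [(200, 90), (250, 60), (1000, 60)]
print(apply_toy_masking(frame))  # [(200, 90), (1000, 60)]
```

The guitar note at 250 Hz is removed because the drum hit masks it, while the equally quiet note at 1000 Hz survives because nothing louder is close to it in frequency.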

Audio Streaming

Audio streaming is a way of delivering audio to a user over a network. It allows a user to listen to an audio file or watch a video without downloading the whole file first; instead, the file is downloaded a small part at a time and held in a temporary area of memory called the buffer.

Audio streaming has a short delay at the start so that the buffer can fill. Sound is then played from the buffer, and playback is seamless unless the connection to the host is lost for long enough that the buffer runs out. Streaming saves space on the hard drive because the whole file does not need to be stored on the device for it to be played, which can also help reduce piracy.

Audio streaming is widely used because it is so convenient. Before audio streaming, it could take quite a while to start listening to music, since the whole file had to be downloaded first. Audio streaming instead sends only the data needed at that moment, holding it in the buffer, and downloads the next part as it is needed.
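The buffering behaviour described above can be sketched as a second-by-second simulation. Everything here is a simplifying assumption: download speed is measured in seconds of audio received per real second, playback uses exactly one second of audio per second, and `connected(t)` is a hypothetical stand-in for the network that reports whether the connection is up at second t.

```python
def simulate_stream(total_seconds, download_rate, start_buffer, connected, max_time=3600):
    """Return (start_time, stall_seconds) for streaming a track of total_seconds."""
    downloaded = 0      # seconds of audio received so far
    played = 0          # seconds of audio already played
    start_time = None   # second at which playback began
    stalls = 0          # seconds spent paused with an empty buffer
    for t in range(max_time):
        if connected(t):
            downloaded = min(total_seconds, downloaded + download_rate)
        buffered = downloaded - played
        if start_time is None and buffered >= min(start_buffer, total_seconds):
            start_time = t              # enough buffered: start playing
        if start_time is not None:
            if buffered >= 1:
                played += 1             # play one second of audio from the buffer
            else:
                stalls += 1             # buffer ran dry: playback pauses
        if played >= total_seconds:
            return start_time, stalls
    raise RuntimeError("track did not finish within max_time seconds")

def outage(t):
    """Hypothetical connection that drops during seconds 2, 3 and 4."""
    return not (2 <= t < 5)

print(simulate_stream(10, 2, 2, outage))  # (0, 1): starts at once, pauses once
print(simulate_stream(10, 2, 4, outage))  # (1, 0): starts 1 s later, never pauses
```

With a 3-second outage, starting after 2 seconds of buffering gives an immediate start but one pause, while waiting for 4 seconds of buffer delays the start by a second and avoids the pause entirely. This is the trade-off behind the delayed start.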

Synthesising Sound

Sound can be synthesised with MIDI (Musical Instrument Digital Interface), which records information about each note, such as duration, pitch, tempo, instrument and volume, and recreates that note when it is played. A drawback of MIDI is that it is hard to reproduce the exact sound of a real performance, because the music is generated note by note as a synthetic sound; this can work well in some genres, such as pop music, but usually less well in others. A MIDI link can carry up to 16 channels of information, and each channel can be routed to a separate device.
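The note information MIDI stores is enough to generate sound. The sketch below turns a note number, a length in beats, a tempo and a velocity into samples. It assumes the common equal-temperament convention in which note 69 is the A above middle C at 440 Hz, and it uses a plain sine wave as the "instrument" with an 8,000 Hz sample rate; both are illustrative choices, since a real synthesiser would use recorded instrument samples or richer waveforms. The function names are hypothetical.

```python
import math

def midi_note_to_hz(note):
    """Pitch of a MIDI note number in equal temperament (note 69 = A4 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)

def beats_to_seconds(beats, tempo_bpm):
    """Convert a duration in beats to seconds at the given tempo."""
    return beats * 60 / tempo_bpm

def synthesise(note, seconds, velocity=100, sample_rate=8000):
    """Generate samples for one note as a sine wave; velocity (0-127) sets the volume."""
    freq = midi_note_to_hz(note)
    amplitude = velocity / 127
    count = int(seconds * sample_rate)
    return [amplitude * math.sin(2 * math.pi * freq * i / sample_rate)
            for i in range(count)]

# Middle C (note 60) lasting one beat at 120 beats per minute
samples = synthesise(60, beats_to_seconds(1, 120))
print(round(midi_note_to_hz(60), 2), "Hz,", len(samples), "samples")  # 261.63 Hz, 4000 samples
```

Changing the tempo alters only the number of samples generated, and changing the note number alters only the frequency, which is why MIDI music is so easy to edit.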